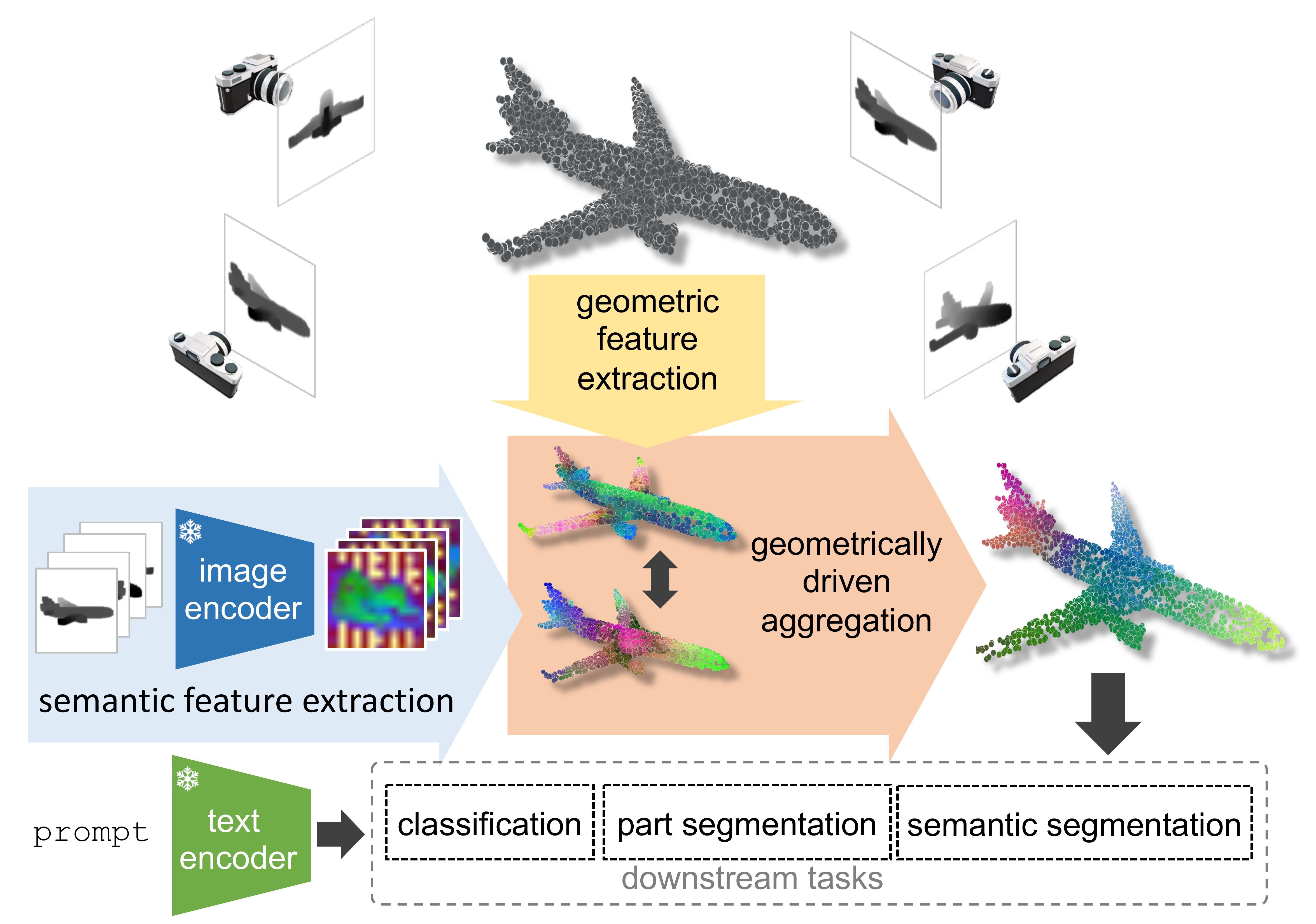

Zero-shot 3D point cloud understanding can be achieved via 2D Vision-Language Models (VLMs). Existing strategies directly map VLM representations from the 2D pixels of rendered or captured views to 3D points, overlooking the inherent and expressible geometric structure of the point cloud.

Geometrically similar or nearby regions can be exploited to bolster point cloud understanding, as they are likely to share semantic information.

To this end, we introduce the first training-free aggregation technique that leverages the point cloud’s 3D geometric structure to improve the quality of the transferred VLM representations. Our approach operates iteratively, performing local-to-global aggregation based on geometric and semantic point-level reasoning.

We benchmark our approach on three downstream tasks (classification, part segmentation, and semantic segmentation) using a variety of datasets that cover synthetic and real-world, as well as indoor and outdoor, scenarios. Our approach achieves new state-of-the-art results on all benchmarks.

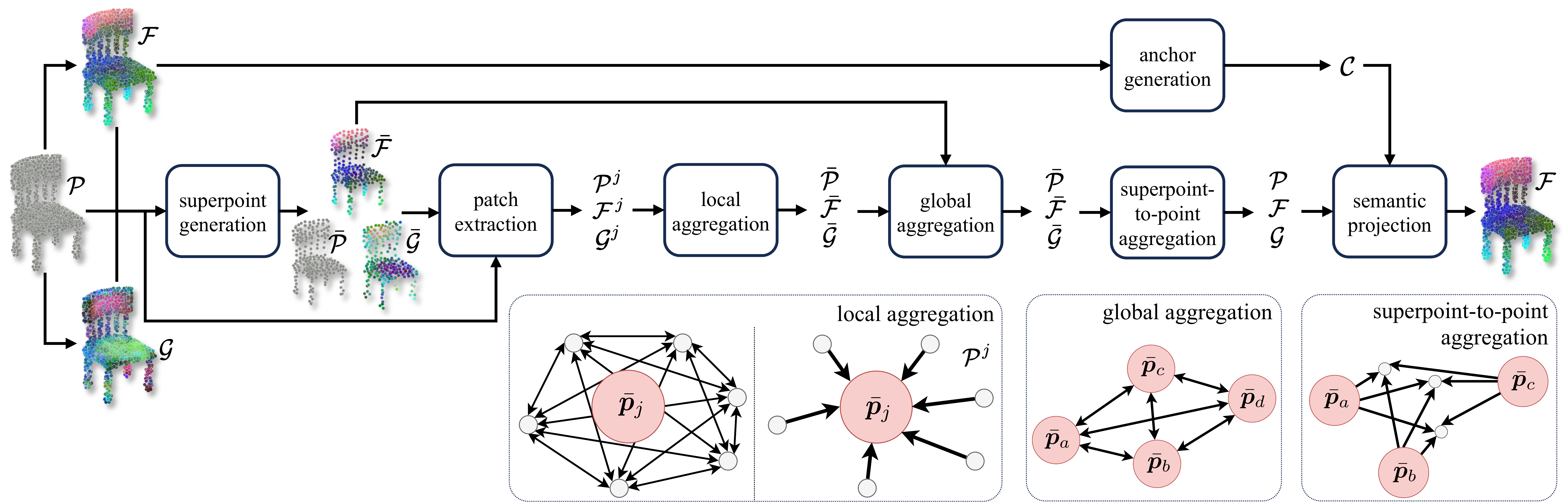

In GeoZe, we begin by extracting dense pixel-level VLM representations from each viewpoint image of a point cloud \( \bf{\mathcal{P}} \). These images can either be rendered from the point cloud or be the original images used for its reconstruction. We project these representations onto the 3D points, obtaining \( \bf{\mathcal{F}} \). We also compute point-level geometric representations \( \bf{\mathcal{G}} \) to guide the aggregation of the VLM representations.
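
The projection step can be sketched as follows, assuming a pinhole camera per view with a known world-to-camera rotation `R`, translation `t` and intrinsics `fx`, `fy`, `cx`, `cy`. The `View` class and the `project` and `lift_features` functions are hypothetical names: each point samples the nearest pixel in every view it projects into, and those pixel features are averaged. Occlusion is deliberately ignored in this sketch; a real pipeline would typically test against a depth map so that hidden points do not inherit features from the surface in front of them.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class View:
    """One viewpoint image with an assumed pinhole camera and its VLM feature map."""
    R: List[List[float]]           # 3x3 world-to-camera rotation (assumed known)
    t: List[float]                 # world-to-camera translation (assumed known)
    fx: float
    fy: float
    cx: float
    cy: float
    feat: List[List[List[float]]]  # H x W x C pixel-level VLM features


def project(p: List[float], v: View) -> Optional[Tuple[int, int]]:
    """Return the (row, col) pixel hit by 3D point p, or None if it is not in the image."""
    # x_cam = R p + t
    xc = [sum(v.R[i][k] * p[k] for k in range(3)) + v.t[i] for i in range(3)]
    if xc[2] <= 0:  # point lies behind the camera
        return None
    col = round(v.fx * xc[0] / xc[2] + v.cx)
    row = round(v.fy * xc[1] / xc[2] + v.cy)
    if 0 <= row < len(v.feat) and 0 <= col < len(v.feat[0]):
        return row, col
    return None


def lift_features(points: List[List[float]], views: List[View]) -> List[Optional[List[float]]]:
    """Average, per point, the pixel features it projects onto across all views (no occlusion test)."""
    lifted: List[Optional[List[float]]] = []
    for p in points:
        hits = []
        for v in views:
            rc = project(p, v)
            if rc is not None:
                hits.append(v.feat[rc[0]][rc[1]])
        if not hits:
            lifted.append(None)  # point is not visible in any view
        else:
            lifted.append([sum(col) / len(hits) for col in zip(*hits)])
    return lifted
```

Points that no view sees are returned as `None` here; how such points are filled in is left open.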

By utilizing point cloud coordinates and geometric representations, we generate superpoints \( \bar{\bf{\mathcal{P}}} \) along with their associated geometric and VLM representations \( \bar{\bf{\mathcal{G}}} \) and \( \bar{\bf{\mathcal{F}}} \).
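
The superpoint construction is not detailed above, so the sketch below substitutes a common stand-in: farthest point sampling (FPS) on the coordinates to pick `m` centers, nearest-center assignment, and per-group averaging of coordinates, geometric features and VLM features. It uses coordinates only for grouping; how GeoZe also brings the geometric representations into superpoint formation may differ. `farthest_point_sampling` and `make_superpoints` are hypothetical names.

```python
from typing import List, Tuple

Mat = List[List[float]]


def dist2(a: List[float], b: List[float]) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(rows: Mat) -> List[float]:
    """Column-wise mean of a non-empty list of vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def farthest_point_sampling(points: Mat, m: int) -> List[int]:
    """Greedy FPS seeded at index 0: repeatedly pick the point farthest from those chosen."""
    chosen = [0]
    d = [dist2(p, points[0]) for p in points]
    while len(chosen) < m:
        nxt = max(range(len(points)), key=lambda i: d[i])
        chosen.append(nxt)
        d = [min(d[i], dist2(points[i], points[nxt])) for i in range(len(points))]
    return chosen


def make_superpoints(points: Mat, geo: Mat, vlm: Mat, m: int) -> Tuple[Mat, Mat, Mat, List[int]]:
    """Group points around m FPS centers and average their coordinates and features.

    Assumes m does not exceed the number of distinct points, so no group is empty.
    """
    centers = [points[i] for i in farthest_point_sampling(points, m)]
    assign = [min(range(m), key=lambda j: dist2(p, centers[j])) for p in points]
    groups = [[i for i, a in enumerate(assign) if a == j] for j in range(m)]
    sp_xyz = [mean([points[i] for i in g]) for g in groups]
    sp_geo = [mean([geo[i] for i in g]) for g in groups]
    sp_vlm = [mean([vlm[i] for i in g]) for g in groups]
    return sp_xyz, sp_geo, sp_vlm, assign
```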

For each superpoint \( \bar{\bf{p}}_j \), we identify its \(k\) nearest neighbors (\(k\text{NN}\)) within the point cloud to form the patch \( \bf{\mathcal{P}}^j \).
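
A brute-force version of the patch construction is sketched below; `knn_patch` and `build_patches` are hypothetical names. Each patch is returned as a list of point indices sorted from nearest to farthest.

```python
import heapq
from typing import List


def dist2(a: List[float], b: List[float]) -> float:
    """Squared Euclidean distance (enough for ranking neighbors)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_patch(center: List[float], points: List[List[float]], k: int) -> List[int]:
    """Indices of the k points closest to `center`, nearest first (linear scan)."""
    return heapq.nsmallest(k, range(len(points)), key=lambda i: dist2(center, points[i]))


def build_patches(superpoints: List[List[float]], points: List[List[float]], k: int) -> List[List[int]]:
    """One patch P^j (a list of point indices) per superpoint j."""
    return [knn_patch(c, points, k) for c in superpoints]
```

For point clouds with many points, a spatial index such as SciPy's `cKDTree` would normally replace the linear scan.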

Within each patch, we perform local feature aggregation to denoise the VLM representation of the corresponding superpoint.
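
The exact local aggregation rule is not given here. As an assumption, the sketch below weights every patch point by a softmax over the sum of its geometric and semantic cosine similarity to the superpoint, scaled by a temperature `tau`, and returns the weighted mean of the patch VLM features. `local_aggregate` and `tau` are hypothetical.

```python
import math
from typing import List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; 0 if either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    e = [math.exp(x - m) for x in xs]
    s = sum(e)
    return [v / s for v in e]


def local_aggregate(sp_geo: List[float], sp_vlm: List[float],
                    patch_geo: List[List[float]], patch_vlm: List[List[float]],
                    tau: float = 0.1) -> List[float]:
    """Denoise one superpoint's VLM feature from its kNN patch (hypothetical weighting).

    Patch points that are dissimilar to the superpoint geometrically or semantically,
    e.g. points from another surface, receive small weights.
    """
    scores = [(cosine(sp_geo, g) + cosine(sp_vlm, f)) / tau
              for g, f in zip(patch_geo, patch_vlm)]
    w = softmax(scores)
    return [sum(wi * f[d] for wi, f in zip(w, patch_vlm)) for d in range(len(sp_vlm))]
```

A lower `tau` makes the weighting sharper, so outlier points in the patch are suppressed more aggressively.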

The superpoints then undergo global aggregation to further refine their VLM representations.
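
One plausible reading of global aggregation, sketched here under that assumption, lets each superpoint gather features from the `k` superpoints whose geometric representations are most similar, wherever they lie in the cloud, so that geometrically similar but distant regions can share semantic information. The neighbor selection, the softmax weighting on semantic similarity and the name `global_aggregate` are all hypothetical.

```python
import heapq
import math
from typing import List


def cosine(a: List[float], b: List[float]) -> float:
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    e = [math.exp(x - m) for x in xs]
    s = sum(e)
    return [v / s for v in e]


def global_aggregate(sp_geo: List[List[float]], sp_vlm: List[List[float]],
                     k: int = 2, tau: float = 0.1) -> List[List[float]]:
    """Refine each superpoint's VLM feature from its k most geometrically similar superpoints.

    Neighbors are chosen in geometric-representation space, not in 3D space;
    within that set, VLM features are averaged with softmax weights on semantic similarity.
    """
    n = len(sp_vlm)
    refined = []
    for i in range(n):
        nbrs = heapq.nlargest(k, range(n), key=lambda j: cosine(sp_geo[i], sp_geo[j]))
        w = softmax([cosine(sp_vlm[i], sp_vlm[j]) / tau for j in nbrs])
        refined.append([sum(wj * sp_vlm[j][d] for wj, j in zip(w, nbrs))
                        for d in range(len(sp_vlm[i]))])
    return refined
```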

A superpoint-to-point aggregation process is finally applied to update the point representations.
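
Superpoint-to-point aggregation could, for example, take the form of inverse-distance-weighted interpolation from each point's `k` nearest superpoints, as sketched below. This interpolation scheme, the `eps` guard and the name `superpoint_to_point` are assumptions rather than the confirmed GeoZe rule.

```python
import heapq
import math
from typing import List


def superpoint_to_point(points: List[List[float]], sp_xyz: List[List[float]],
                        sp_vlm: List[List[float]], k: int = 3,
                        eps: float = 1e-8) -> List[List[float]]:
    """Give every point an inverse-distance-weighted mix of its k nearest superpoints' VLM features."""
    out = []
    for p in points:
        nbrs = heapq.nsmallest(k, range(len(sp_xyz)), key=lambda j: math.dist(p, sp_xyz[j]))
        # eps avoids division by zero when a point coincides with a superpoint
        w = [1.0 / (math.dist(p, sp_xyz[j]) + eps) for j in nbrs]
        s = sum(w)
        out.append([sum(wj * sp_vlm[j][d] for wj, j in zip(w, nbrs)) / s
                    for d in range(len(sp_vlm[0]))])
    return out
```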

We also apply Mean Shift clustering to the point-level VLM representations \( \bf{\mathcal{F}} \) to establish VLM representation anchors \( \bf{\mathcal{C}} \). As a last step, we use these anchors to further refine the point-level representations through semantic projection. The VLM representations processed by GeoZe are then ready to be used for downstream tasks.
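
The anchor step can be sketched with a plain Gaussian-kernel Mean Shift over the point-level VLM features, merging modes that converge within half a `bandwidth` of each other. The form of `semantic_projection` is a hypothetical stand-in: each feature is blended (weight `alpha`) with a softmax-weighted combination of the anchors it is most similar to. The parameter values are illustrative only.

```python
import math
from typing import List

Vec = List[float]


def cosine(a: Vec, b: Vec) -> float:
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    e = [math.exp(x - m) for x in xs]
    s = sum(e)
    return [v / s for v in e]


def mean_shift_anchors(feats: List[Vec], bandwidth: float,
                       iters: int = 100, tol: float = 1e-6) -> List[Vec]:
    """Gaussian-kernel Mean Shift: move each feature to a density mode, then merge nearby modes."""
    anchors: List[Vec] = []
    for start in feats:
        x = list(start)
        for _ in range(iters):
            w = [math.exp(-math.dist(x, f) ** 2 / (2 * bandwidth ** 2)) for f in feats]
            s = sum(w)
            new = [sum(wi * f[d] for wi, f in zip(w, feats)) / s for d in range(len(x))]
            shift = math.dist(new, x)
            x = new
            if shift < tol:
                break
        # keep the mode only if no existing anchor is already close to it
        if all(math.dist(x, a) > bandwidth / 2 for a in anchors):
            anchors.append(x)
    return anchors


def semantic_projection(f: Vec, anchors: List[Vec], tau: float = 0.1, alpha: float = 0.5) -> Vec:
    """Hypothetical projection: blend f with a similarity-weighted mix of the anchors."""
    w = softmax([cosine(f, a) / tau for a in anchors])
    proj = [sum(wi * a[d] for wi, a in zip(w, anchors)) for d in range(len(f))]
    return [alpha * x + (1 - alpha) * y for x, y in zip(f, proj)]
```

This naive Mean Shift is quadratic in the number of features per iteration; scikit-learn's `MeanShift` (for example with `bin_seeding=True`) is a faster off-the-shelf alternative.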

@inproceedings{mei2024geometrically,
  title = {Geometrically-driven Aggregation for Zero-shot 3D Point Cloud Understanding},
  author = {Mei, Guofeng and Riz, Luigi and Wang, Yiming and Poiesi, Fabio},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2024}
}